Designing scalable, distributed systems involves a completely different set of principles and paradigms compared to regular monolithic client-server systems. Typical large distributed systems, such as those of Google, Facebook or Amazon, are made up of commodity servers. These servers are expected to fail, suffer disk crashes, run into network issues or be struck by natural disasters.

Rather than treating failures and disasters as the exception, these systems are designed on the assumption that the worst will happen. The principles and protocols assume that failures are the rule rather than the exception. Designing to accommodate failures is the key to a good design of distributed, scalable systems. A key consideration in a distributed system is the need to maintain consistency, availability and reliability. This, of course, is limited by the CAP Theorem postulated by Eric Brewer, which states that a system can fully provide only two of “consistency, availability and partition tolerance”.

Some key techniques in distributed systems

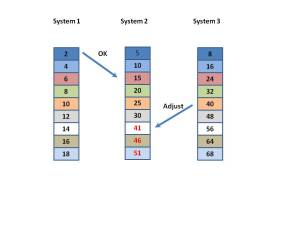

Vector Clocks: An obvious issue in distributed systems with hundreds of servers is that each server has its own clock running at a slightly different rate, so it is difficult to get a view of a global time. How does one determine causality in such a distributed system? The solution to this problem is provided by logical clocks, devised by Leslie Lamport and later extended into Vector Clocks by Colin Fidge and Friedemann Mattern. These clocks provide a way of determining the causal ordering of events. In the simple scheme shown in the figure, each system maintains a timestamp based on its own internal clock, which it keeps incrementing. When a system sends an event to another system, it attaches its current timestamp to the message. If the receiving system's own timestamp is less than the sender’s timestamp when the message arrives, it sets its timestamp to the sender’s timestamp plus 1 and then continues to increment from there through its own internal clock. In the figure, the event sent from System 1 to System 2 needs no adjustment since the sender’s timestamp “2” < “15”. However, when System 3 sends an event with timestamp 40 to System 2, which receives it at timestamp 35, System 2 sets its clock to 40 + 1 = 41 so that it knows it received the event after it was sent from System 3, and then increments as it did before. This ensures that a partial ordering of events is maintained across systems. A vector clock generalizes this by having each system keep an array with one such counter per system, which also makes it possible to tell when two events are concurrent.
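The receive rule in the 35 → 41 example can be sketched in a few lines of Python. This is only an illustrative sketch: the names `LamportClock`, `vc_merge` and `happened_before` are hypothetical, the first class models the single-counter (Lamport) scheme from the example, and the two functions show how the per-system array of a vector clock might be merged and compared.

```python
class LamportClock:
    """Single logical counter per system (hypothetical helper for illustration)."""

    def __init__(self, time=0):
        self.time = time

    def tick(self):
        # A local event advances the counter by one.
        self.time += 1
        return self.time

    def send(self):
        # Sending is itself an event; the message carries the new timestamp.
        return self.tick()

    def receive(self, msg_time):
        # Move past the sender's timestamp if it is ahead of ours.
        self.time = max(self.time, msg_time) + 1
        return self.time


def vc_merge(local, received, node):
    """Vector clock receive: element-wise max, then bump our own entry."""
    merged = {n: max(local.get(n, 0), received.get(n, 0))
              for n in local.keys() | received.keys()}
    merged[node] = merged.get(node, 0) + 1
    return merged


def happened_before(a, b):
    """True if vector clock `a` causally precedes vector clock `b`."""
    nodes = a.keys() | b.keys()
    no_greater = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    some_less = any(a.get(n, 0) < b.get(n, 0) for n in nodes)
    return no_greater and some_less


system2 = LamportClock(time=35)
print(system2.receive(40))  # 41, as in the System 3 -> System 2 example
```

If neither `happened_before(a, b)` nor `happened_before(b, a)` holds, the two events are concurrent, which is the case a store such as Dynamo has to reconcile.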

Vector clocks have been used in Amazon’s e-retail website to reconcile updates. The use of vector clocks to manage consistency is described in Amazon’s Dynamo architecture.

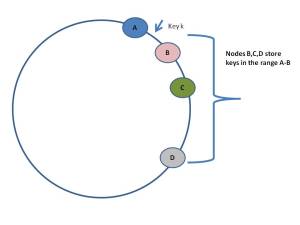

Distributed Hash Table (DHT): A Distributed Hash Table uses a hash function (128 bits in the example here) to distribute keys over several nodes that can be conceptually assumed to reside on the circumference of a circle. The hash space wraps around, so the largest hash value sits next to the smallest. There are several algorithms that are used to locate keys on this conceptual circle. One such algorithm is the Chord system. These algorithms try to get to the exact node in the smallest number of hops by storing a small amount of local routing data at each node. Chord maintains a finger table that allows it to get to the destination node in O(log n) hops. Other algorithms try to get to the desired node in O(1) hops. Databases like Cassandra and Amazon’s Dynamo use a consistent hashing technique. Cassandra, for example, can spread the keys of records over distributed servers by using a 128-bit hash of the key.
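A minimal sketch of the hash ring idea in Python is given here. It assumes every client knows the full ring (closer to the O(1)-hop style than to Chord's finger table), uses MD5 only because it yields a 128-bit value, and omits virtual nodes and replication. The names `ring_hash` and `HashRing` are hypothetical.

```python
import bisect
import hashlib


def ring_hash(key: str) -> int:
    # MD5 yields a 128-bit value; used here for placement only, not security.
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest(), "big")


class HashRing:
    """Nodes and keys share one circular hash space (illustrative sketch)."""

    def __init__(self, nodes=()):
        self._positions = []  # sorted node positions on the ring
        self._owners = {}     # position -> node name
        for node in nodes:
            self.add(node)

    def add(self, node):
        pos = ring_hash(node)
        bisect.insort(self._positions, pos)
        self._owners[pos] = node

    def remove(self, node):
        pos = ring_hash(node)
        self._positions.remove(pos)
        del self._owners[pos]

    def lookup(self, key):
        if not self._positions:
            raise LookupError("ring is empty")
        # First node at or after the key's position, walking clockwise.
        idx = bisect.bisect_left(self._positions, ring_hash(key))
        # Past the largest position we wrap around to the smallest one.
        return self._owners[self._positions[idx % len(self._positions)]]


ring = HashRing(["node-a", "node-b", "node-c"])
owner = ring.lookup("user:42")  # one of the three node names
```

The property that makes this "consistent" is that removing a node only moves the keys that node owned; every other key keeps its owner.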

Quorum Protocol: Since systems are essentially limited to choosing two of the three properties of consistency, availability and partition tolerance, tradeoffs are made based on cost, performance and user experience. Google’s BigTable chooses consistency over availability, while Amazon’s Dynamo chooses “availability over consistency”. While the CAP theorem maintains that only 2 of the 3 properties are fully possible, it does not mean that Google’s system does not support some minimum availability or that Dynamo does not support consistency. In fact, Amazon’s Dynamo provides “eventual consistency”, by which data becomes consistent after a period of time.

Since failures are inevitable and a number of servers will be down at any instant of time, writes are replicated across many servers. A write is considered “successful” once it has been acknowledged by a majority, i.e. N/2 + 1, of the N servers holding replicas. Similarly, a read is considered successful when a quorum of more than N/2 servers responds. Typical designs use W + R > N as their design criterion, where W and R are the number of servers that must acknowledge a write and a read respectively, and N is the number of servers holding a replica of the data. This ensures that every read quorum overlaps every write quorum, so one can read one's own writes in a consistent way. Amazon’s Dynamo uses the sloppy quorum technique, where data is replicated on the first N healthy nodes as opposed to strictly the N nodes obtained through consistent hashing.
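The W + R > N criterion can be checked by brute force for small N. The sketch below is only an illustration (the function names are hypothetical): it enumerates every possible write set and read set and confirms that they always share at least one server exactly when W + R > N.

```python
from itertools import combinations


def majority(n):
    # Smallest number of servers that is more than half of n.
    return n // 2 + 1


def quorums_overlap(n, w, r):
    # The design criterion from the text.
    return w + r > n


def every_read_sees_write(n, w, r):
    """Brute force: does every r-subset of servers intersect every w-subset?"""
    servers = range(n)
    return all(set(ws) & set(rs)
               for ws in combinations(servers, w)
               for rs in combinations(servers, r))


print(majority(3), majority(5))        # 2 3
print(every_read_sees_write(3, 2, 2))  # True: any 2 of 3 overlap
print(every_read_sees_write(3, 1, 1))  # False: a read may miss the write
```

With N = 3, choosing W = 2 and R = 2 tolerates one failed server for both reads and writes, while W = 3 and R = 1 gives fast reads at the cost of writes that block if any replica is down.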

Gossip Protocol: This is a widely used protocol that allows the servers in a distributed system to become aware of server crashes or of new servers joining the system. Membership changes and failure detection are performed by propagating the changes to a set of randomly chosen neighbors, who in turn propagate them to another set of neighbors. This ensures that after a certain period of time the view of the system becomes consistent across servers.
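How quickly such a rumor spreads can be seen in a small simulation. This is a simplified push-only sketch under assumed parameters (`fanout`, `seed` and `max_rounds` are illustrative, not taken from any particular system): in each round, every server that already knows about a change tells a few randomly chosen peers.

```python
import random


def gossip_rounds(n_nodes, fanout=2, seed=0, max_rounds=1000):
    """Return how many servers know the update after each round (push gossip)."""
    rng = random.Random(seed)   # seeded so that runs are repeatable
    informed = {0}              # server 0 observes the change first
    history = [len(informed)]
    while len(informed) < n_nodes and len(history) <= max_rounds:
        newly_told = set()
        for node in informed:
            peers = [p for p in range(n_nodes) if p != node]
            # Each informed server picks `fanout` distinct random peers.
            newly_told.update(rng.sample(peers, min(fanout, len(peers))))
        informed |= newly_told
        history.append(len(informed))
    return history


history = gossip_rounds(n_nodes=50, fanout=3, seed=1)
print(len(history) - 1, "rounds until all 50 servers know")
```

Because the number of informed servers can multiply each round, the number of rounds needed tends to grow roughly with the logarithm of the cluster size, which is why gossip scales to large clusters without a central coordinator.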

Hinted Handoff and Merkle trees: To handle server failures, a replica is sometimes sent to a healthy node if the node for which it was destined is temporarily down. For example, data destined for Node A is delivered to Node D, which maintains a hint in its metadata that the data is to be handed off to Node A once it is healthy again. Merkle trees are used to synchronize replicas amongst nodes. Because a Merkle tree is a tree of hashes, two nodes can compare hashes from the root down and transfer only the data whose hashes differ, which minimizes the amount of data that needs to be transferred for synchronization.
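The Merkle tree comparison can be sketched as follows. This is a simplified illustration, assuming both replicas hold the same number of data blocks in the same order and at least one block; `Node`, `build` and `diff` are hypothetical names, and SHA-256 is an arbitrary choice of hash.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


@dataclass
class Node:
    digest: bytes
    lo: int                       # this node covers blocks[lo:hi]
    hi: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def build(blocks, lo=0, hi=None):
    """Build a Merkle tree over a non-empty list of byte blocks."""
    if hi is None:
        hi = len(blocks)
    if hi - lo < 1:
        raise ValueError("need at least one block")
    if hi - lo == 1:
        return Node(_h(blocks[lo]), lo, hi)            # leaf: hash of the data
    mid = (lo + hi) // 2
    left, right = build(blocks, lo, mid), build(blocks, mid, hi)
    # Internal node: hash of the two child hashes.
    return Node(_h(left.digest + right.digest), lo, hi, left, right)


def diff(a, b):
    """Indices of differing blocks, descending only into mismatched subtrees."""
    if a.digest == b.digest:
        return []                  # whole subtree identical, nothing to send
    if a.left is None:
        return [a.lo]              # mismatched leaf
    return diff(a.left, b.left) + diff(a.right, b.right)


replica_a = [b"k1=v1", b"k2=v2", b"k3=v3", b"k4=v4", b"k5=v5"]
replica_d = list(replica_a)
replica_d[3] = b"k4=stale"
print(diff(build(replica_a), build(replica_d)))  # [3]
```

Only the root hashes need to be exchanged when the replicas already agree, and a single stale block is located by following one path of mismatched hashes rather than comparing every block.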

These are some of the main design principles that are used while designing scalable, distributed systems. A related post is “Designing a scalable architecture for the cloud”.
